Please enjoy this ILTA Just-In-Time blog authored by Ricci Masero, Marketing Manager, Intellek.

A one-of-a-kind study reveals that legal AI tools now outperform human lawyers in four key areas: data extraction, document Q&A, summarization, and transcript analysis. For ILTAns, this represents a critical inflection point in legal technology adoption. The Vals Legal AI Report (VLAIR) also shows these tools working up to 80 times faster than lawyers, which creates both efficiency opportunities and challenges for traditional billing models.

While AI still lags behind humans in redlining and complex research tasks, the evidence suggests that firms should strategically implement AI where it excels. This research clarifies exactly where AI delivers the most value and raises important questions about whether to build or buy your AI solution. As the legal profession evolves, success will come from finding the right balance between AI efficiency and irreplaceable human judgment.

The legal-tech landscape has reached a watershed moment with the release of the Vals Legal AI Report, the most comprehensive evaluation of legal AI tools to date. Using actual law firm data and a human lawyer control group, the study delivers compelling evidence that artificial intelligence can now outperform human lawyers in several critical legal tasks.

The Breakthrough: AI Surpasses Lawyers in Four Key Areas

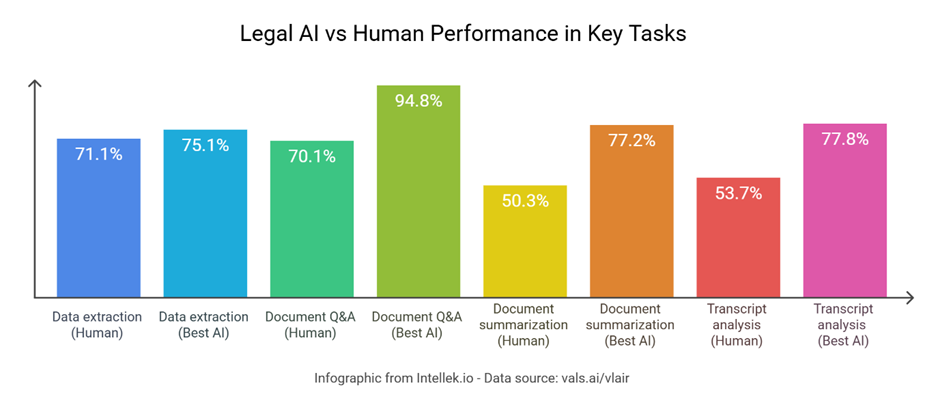

The VLAIR study evaluated four leading legal AI tools across seven common legal tasks, benchmarking their results against a lawyer control group. The headline finding is clear: AI outperformed human lawyers in four of the seven legal performance areas tested.

The four areas where legal AI tools demonstrated superiority include:

- Data extraction (Best AI: 75.1% vs. Lawyer Baseline: 71.1%)

- Document Q&A (Best AI: 94.8% vs. Lawyer Baseline: 70.1%)

- Document summarization (Best AI: 77.2% vs. Lawyer Baseline: 50.3%)

- Transcript analysis (Best AI: 77.8% vs. Lawyer Baseline: 53.7%)
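Raw percentages hide how uneven these leads are. The short Python sketch below computes, for each of the four tasks, the gap between the best AI score and the Lawyer Baseline in percentage points and relative to the baseline. It uses only the figures listed above. The `SCORES` table and the `margins` helper are our own illustration, not anything published with the VLAIR report.

```python
# Best AI score vs. Lawyer Baseline (percent), as quoted from the VLAIR results above.
SCORES = {
    "Data extraction": (75.1, 71.1),
    "Document Q&A": (94.8, 70.1),
    "Document summarization": (77.2, 50.3),
    "Transcript analysis": (77.8, 53.7),
}


def margins(scores):
    """Return (task, point gap, gap as % of baseline), largest point gap first."""
    rows = []
    for task, (best_ai, lawyer) in scores.items():
        gap = best_ai - lawyer
        rows.append((task, round(gap, 1), round(100 * gap / lawyer, 1)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for task, points, relative in margins(SCORES):
    print(f"{task}: +{points} points ({relative}% above the Lawyer Baseline)")
```

Run as-is, it ranks document summarization first (a 26.9-point lead) and data extraction last (4.0 points), a useful reminder that AI's advantage is far from uniform even within the tasks where it wins.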

Harvey Assistant emerged as the standout performer, scoring highest in five tasks and claiming the top position overall. Harvey outperformed the Lawyer Baseline in four tasks, and its standout results were 94.8% for Document Q&A, the top score on that task, and 80.2% for Chronology Generation, matching the Lawyer Baseline.

Thomson Reuters CoCounsel also demonstrated impressive capabilities, earning the highest score for Document Summarization (77.2%) and scoring consistently high across all four tasks it participated in (averaging 79.5%).

The Speed Factor: A Double-Edged Sword

Perhaps most striking is the efficiency advantage demonstrated by legal AI solutions. The tools performed tasks significantly faster than humans, ranging from six times faster at their slowest to an astonishing 80 times faster at their peak. This speed differential alone presents a compelling case for AI adoption, even where output quality is merely comparable to a lawyer's.

But this very speed advantage creates a direct conflict with how law firms traditionally make money. Because most firms bill clients by the hour, technologies that complete tasks 6 to 80 times faster threaten the revenue model itself. Partners face a difficult choice: adopt tools that dramatically reduce billable hours and potentially cut profits, or maintain slower manual processes to preserve income. Could a shift from the billable hour to value-based pricing finally become the norm?
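A back-of-the-envelope calculation shows why the speedup matters so much under hourly billing. The Python sketch below applies the 6x and 80x speedups reported in the study to a hypothetical 10-hour task billed at a hypothetical $500 per hour, and compares that with an equally hypothetical $5,000 flat fee for the same deliverable. None of these dollar or hour figures come from VLAIR, and the function names are illustrative only.

```python
# Hypothetical inputs for illustration only; not taken from the VLAIR study.
MANUAL_HOURS = 10.0   # time the task takes when done manually
HOURLY_RATE = 500.0   # billing rate in USD per hour
FLAT_FEE = 5000.0     # fixed price for the same deliverable


def hours_needed(manual_hours, speedup):
    """Hours of work left once the task runs `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return manual_hours / speedup


def hourly_revenue(manual_hours, rate, speedup):
    """Revenue when the work is billed by the hour."""
    return hours_needed(manual_hours, speedup) * rate


def effective_rate_under_flat_fee(fee, manual_hours, speedup):
    """What each hour actually worked earns when the client pays a fixed fee."""
    return fee / hours_needed(manual_hours, speedup)


for speedup in (1, 6, 80):  # 1x = manual baseline; 6x and 80x are the study's range
    hourly = hourly_revenue(MANUAL_HOURS, HOURLY_RATE, speedup)
    flat = effective_rate_under_flat_fee(FLAT_FEE, MANUAL_HOURS, speedup)
    print(f"{speedup:>2}x: hourly billing ${hourly:,.2f}; "
          f"flat fee earns ${flat:,.2f} per hour worked")
```

Under these assumptions, hourly billing for the task falls from $5,000 to about $833 at 6x and to $62.50 at 80x, while the same flat fee works out to roughly $3,000 and $40,000 per hour worked. That asymmetry is why the question of value-based pricing keeps resurfacing.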

Where Human Lawyers Still Excel

Despite AI's impressive showing, human lawyers maintained superiority in certain domains. Specifically, lawyers outperformed all AI tools in redlining (79.7%) and EDGAR research tasks (70.1%), suggesting these areas still require the nuanced judgment and contextual understanding that experienced legal professionals bring to the table.

For chronology generation, the results showed parity: Harvey Assistant matched the Lawyer Baseline at 80.2%, indicating that in this area AI has already reached performance equivalent to that of human experts.

Research Remains Challenging for AI

The study challenges conventional wisdom regarding AI's application to legal research. While many have assumed research to be an ideal use case for AI in legal settings, the VLAIR study indicates that AI still falls short of expectations in complex legal research tasks.

This is particularly evident in the EDGAR research task, which involves multiple research steps and iterative decision-making. Only one legal AI tool (Oliver) attempted the challenge, and it scored well below the Lawyer Baseline (55.2% vs. 70.1%).

Is this surprising? Not entirely. Navigating complex research requires contextual understanding and judgment calls that current AI systems struggle with. Better performance may depend on further advances in the nascent field of AI agents and agentic workflows.

Legal AI: The Build vs. Buy Question

One question not addressed in the VLAIR study is whether specialized legal AI tools provide significant advantages over properly prompted base models like GPT-4o or Claude.

Should law firms invest in specialized legal AI tools, or can they achieve similar results with foundation models using appropriate context and prompting? This remains an open question that merits further investigation.

For organizations considering this choice, several factors come into play:

- Specialized legal AI tools offer ready-to-deploy functionality, purpose-built legal features, vendor support, and risk mitigation through compliance guarantees.

- Meanwhile, the "build" approach using foundation models potentially offers cost efficiency, customization control, data sovereignty, integration flexibility, and the ability to incorporate institutional knowledge.

The optimal approach likely varies with technical capacity, practice specificity, volume of use, risk tolerance, and security requirements, dimensions that the VLAIR study's tool-versus-human comparison does not fully capture.
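One simple way to structure this decision is a weighted scoring matrix over the factors just listed. The Python sketch below uses that generic technique; it is not a method from the VLAIR study, and every weight and rating in it is hypothetical, describing an imaginary firm with little in-house engineering capacity. A real firm would substitute its own assessments.

```python
from dataclasses import dataclass

# Factors named in the article. Weights (how much each matters to the firm)
# are hypothetical placeholders.
WEIGHTS = {
    "technical capacity": 3,
    "practice specificity": 2,
    "volume of use": 1,
    "risk tolerance": 2,
    "security requirements": 2,
}


@dataclass
class Option:
    name: str
    ratings: dict  # factor -> fit from 1 (poor) to 5 (strong); hypothetical


def weighted_score(option, weights):
    """Weighted average fit of an option across every weighted factor."""
    missing = sorted(set(weights) - set(option.ratings))
    if missing:
        raise ValueError(f"{option.name} has no rating for: {missing}")
    total = sum(weights.values())
    return sum(w * option.ratings[f] for f, w in weights.items()) / total


# Hypothetical ratings for a firm with limited engineering staff.
buy = Option("Buy a specialized legal AI tool", {
    "technical capacity": 5, "practice specificity": 3, "volume of use": 3,
    "risk tolerance": 4, "security requirements": 3,
})
build = Option("Build on a foundation model", {
    "technical capacity": 2, "practice specificity": 4, "volume of use": 4,
    "risk tolerance": 2, "security requirements": 4,
})

ranked = sorted((buy, build), key=lambda o: weighted_score(o, WEIGHTS), reverse=True)
for option in ranked:
    print(f"{option.name}: {weighted_score(option, WEIGHTS):.2f} / 5")
```

For this imaginary firm, buying scores 3.80 against 3.00 for building. A firm with a strong engineering team and strict data-sovereignty needs would rate the factors differently and could reach the opposite conclusion.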

Real-World Implementation Challenges

How do these tools perform outside carefully controlled testing environments? Real-world legal workflows are rarely clean or linear. Data is often incomplete, contracts are inconsistent, and client-specific preferences may override standard processes.

The ability of AI tools to handle these messy real-world scenarios remains a critical question for firms considering adoption. The VLAIR study acknowledges this limitation, noting room for improvement in both evaluation methods and tool performance.

Implications for Different Legal Settings

For large law firms, the study effectively eliminates reasonable doubt about AI's utility in legal work. Resources should be directed toward tools that excel in the high-performing areas identified: data extraction, document Q&A, summarization, and transcript analysis.

But what about smaller firms? A notable gap in the current VLAIR study is its focus on tools primarily used by larger organizations. Scalable, cost-effective AI implementation is possible for small firms but may require different approaches than those highlighted in the study.

Similarly, in-house legal teams may find that the evaluated tools don't reflect what corporate legal departments actually use. This highlights the diversity of approaches to AI adoption across different segments of the legal profession.

The Path Forward: Strategic Symbiosis

The VLAIR study marks a turning point in the evidence-based evaluation of AI's capability to transform legal practice. Without suggesting that legal AI will replace lawyers entirely, the report clearly shows where AI can enhance legal work, delivering similar or better results much more efficiently.

The evidence points toward a future where lawyers and AI tools work in a symbiotic partnership. AI excels at quickly processing massive amounts of data and spotting patterns, while human lawyers provide judgment, creativity, and ethical reasoning for complex legal issues.

For the legal industry, the path forward involves careful integration of these tools into existing workflows, ongoing evaluation of their performance, and a willingness to experiment with new approaches. The question isn't whether AI adds value to legal practice but how to best use its capabilities while preserving the human judgment and expertise that remain crucial to quality legal work.

Read the VLAIR study in full: https://www.vals.ai/vlair

Author bio

Ricci Masero: With a track record spanning over two decades in marketing and communications, Ricci is a visionary Chartered Marketer. His insights and thought leadership have secured features on some of the most prominent platforms in the business world, including Forbes, The Learning Guild, Training Journal, Entrepreneur, Training Magazine, HR Zone, and AI Journal. He is currently focused on cloud-based learning technology at Intellek, which supports law firms and LegalTech vendors with the means to streamline onboarding processes, supercharge adoption rates, and promote a culture of continuous learning.
